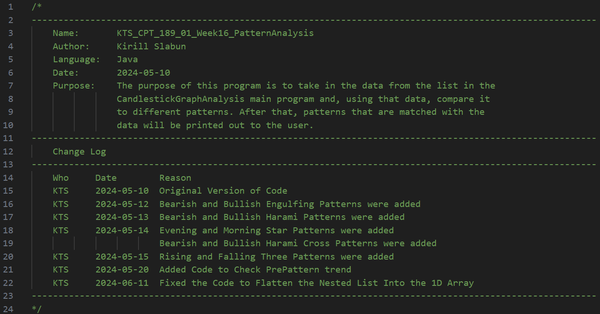

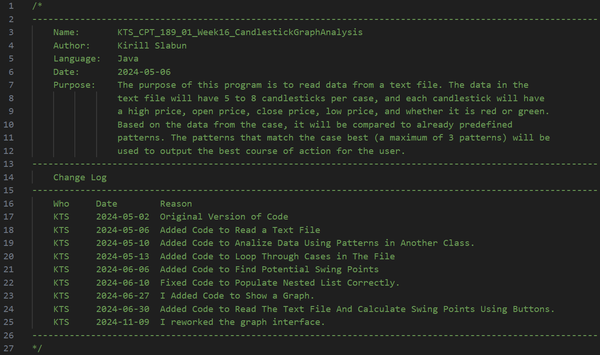

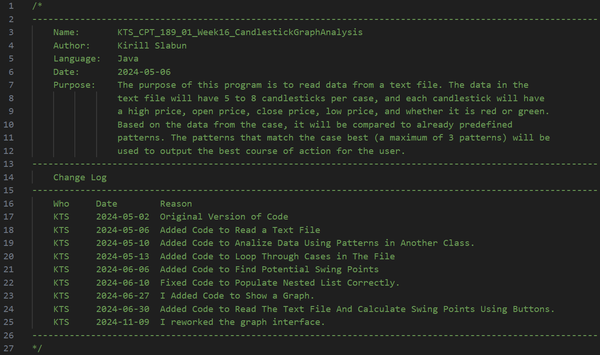

Heuristic analysis of financial data was my first application. The program is far from perfect because, when I created it, I was still learning Java, and my only prior coding experience was a single Python course. What also sets this project apart from all the others is that I created it entirely without the assistance of Large Language Models. This project served as the basis for all subsequent projects, and some parts were reused, such as the pattern detection, which was later implemented in Python within the Transformer Trading Agent project.

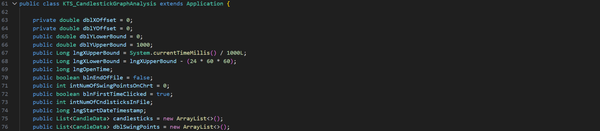

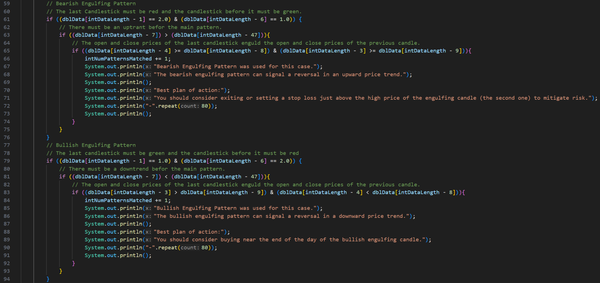

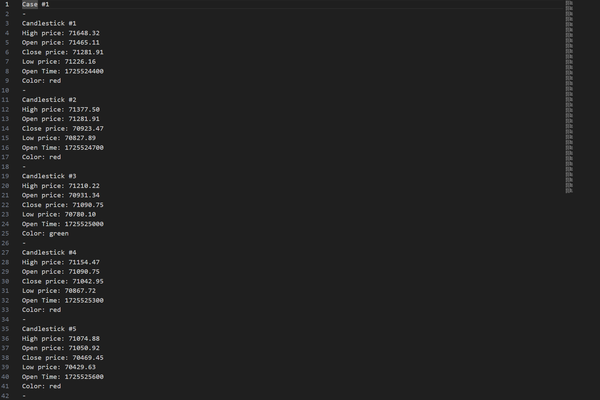

Initially, this project was part of my coursework. My main objective was to extract candlestick data from a text file and identify trading patterns such as Engulfing, Harami, Evening Star, Morning Star, Harami Cross, Rising Three, and Falling Three. The project also required using the JavaFX library to create an interactive UI. I also had to follow strict naming conventions (camelCase) and standards for formatting, commenting, and best practices, which I follow to this day.
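To illustrate what detecting one of these patterns can look like, here is a minimal Python sketch of an Engulfing check. It is not the original Java code: the `Candle` class, the function names, and the exact comparison rules (the second candle's body fully covers the first candle's body, with opposite direction) are assumptions based on a common textbook definition of the pattern.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Candle:
    """One candlestick (hypothetical structure for this sketch)."""
    open: float
    high: float
    low: float
    close: float


def engulfing(prev: Candle, curr: Candle) -> Optional[str]:
    """Return 'bullish' or 'bearish' if curr's body engulfs prev's body, else None."""
    prev_bearish = prev.close < prev.open
    prev_bullish = prev.close > prev.open
    curr_bullish = curr.close > curr.open
    curr_bearish = curr.close < curr.open

    # Bullish engulfing: a falling candle followed by a rising candle
    # whose body covers the previous body from below and above.
    if prev_bearish and curr_bullish:
        if curr.open <= prev.close and curr.close >= prev.open:
            return "bullish"

    # Bearish engulfing: the mirror image of the case above.
    if prev_bullish and curr_bearish:
        if curr.open >= prev.close and curr.close <= prev.open:
            return "bearish"

    return None


def find_engulfing(candles: List[Candle]) -> List[Tuple[int, str]]:
    """Scan consecutive pairs and return (index of second candle, kind)."""
    found = []
    for i in range(1, len(candles)):
        kind = engulfing(candles[i - 1], candles[i])
        if kind is not None:
            found.append((i, kind))
    return found
```

The other patterns (Harami, Morning Star, and so on) would follow the same idea: compare the bodies of two or three neighboring candles against a fixed set of rules.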

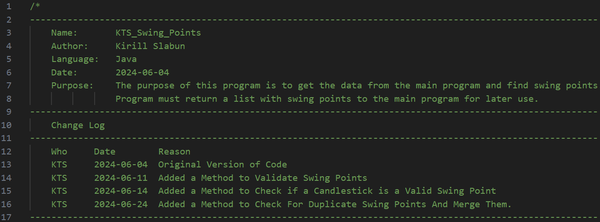

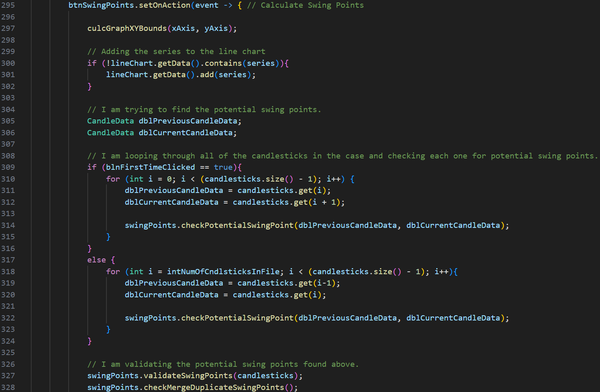

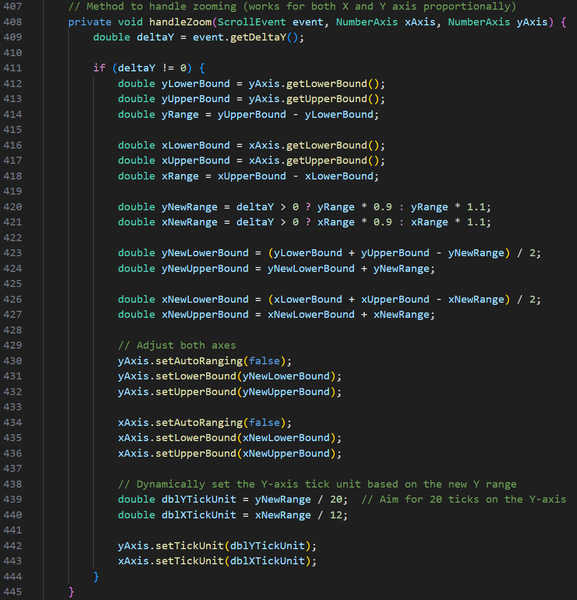

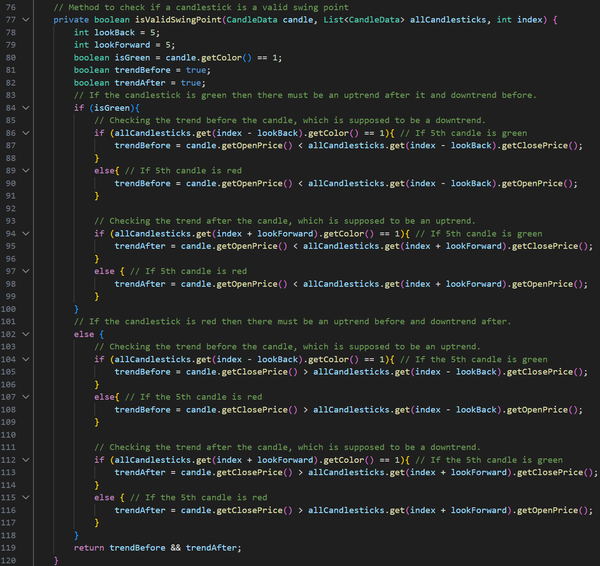

After I had finished the course, I didn’t stop developing my program. I later implemented my own swing-point detection algorithm that considers the 5 candlesticks before and the 5 candlesticks after a possible swing point. A candlestick is marked as a high swing point if there is an uptrend over the 5 candlesticks before it and a downtrend over the 5 candlesticks after it, and as a low swing point if there is a downtrend before it and an uptrend after it. The detected swing points then go through a second algorithm that slides a window over all the candlesticks: if a window contains more than one swing point of the same type, only the highest one (for high swing points) or the lowest one (for low swing points) is kept.
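The two steps described above can be sketched in Python as follows. This is an illustration under assumptions, not the original Java implementation: here an "uptrend" is taken to mean strictly rising highs (or lows) across the 5 candlesticks leading into the candidate, a "downtrend" the opposite, and the `window` size of the second pass is a hypothetical parameter.

```python
from typing import List, Sequence, Tuple


def is_rising(values: Sequence[float]) -> bool:
    """True if every value is strictly greater than the one before it."""
    return all(a < b for a, b in zip(values, values[1:]))


def is_falling(values: Sequence[float]) -> bool:
    """True if every value is strictly smaller than the one before it."""
    return all(a > b for a, b in zip(values, values[1:]))


def find_swing_points(highs, lows, span: int = 5) -> List[Tuple[int, str]]:
    """Mark candle i as a swing point based on `span` candles on each side."""
    swings = []
    for i in range(span, len(highs) - span):
        # Slices include candle i itself, so each trend check compares
        # the candidate against its `span` neighbors on that side.
        if is_rising(highs[i - span:i + 1]) and is_falling(highs[i:i + span + 1]):
            swings.append((i, "high"))
        elif is_falling(lows[i - span:i + 1]) and is_rising(lows[i:i + span + 1]):
            swings.append((i, "low"))
    return swings


def filter_swings(swings, highs, lows, window: int = 12) -> List[Tuple[int, str]]:
    """Slide a window over the candles; keep one swing point per type per window."""
    removed = set()
    last_start = max(0, len(highs) - window)
    for start in range(last_start + 1):
        end = start + window
        for kind in ("high", "low"):
            inside = [i for i, k in swings
                      if k == kind and start <= i < end and i not in removed]
            if len(inside) > 1:
                if kind == "high":
                    keep = max(inside, key=lambda i: highs[i])  # highest high wins
                else:
                    keep = min(inside, key=lambda i: lows[i])   # lowest low wins
                removed.update(i for i in inside if i != keep)
    return [(i, k) for i, k in swings if i not in removed]
```

With this strict trend definition, two swing points of the same type are always at least 10 candlesticks apart, so the second pass only has an effect when the window is wider than that.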

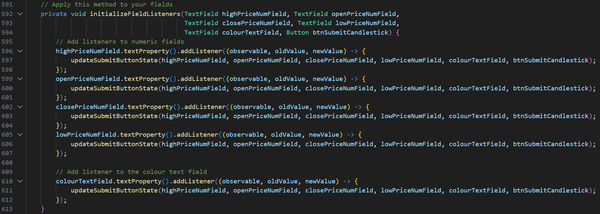

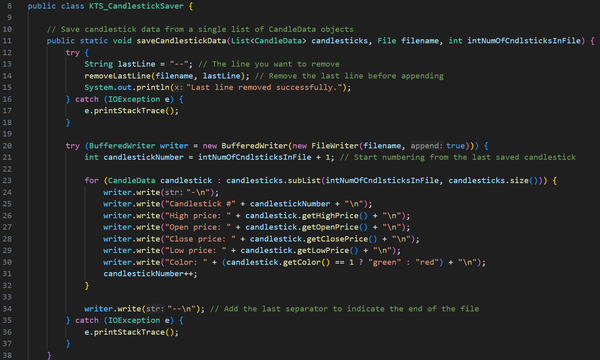

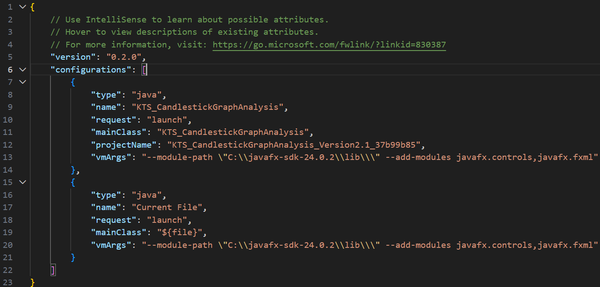

There were other improvements to the application, such as changing the text file format, introducing timestamps, improving data management, allowing candlestick info to be added directly through the application, and improving read and write operations on the file.

I have also significantly improved the User Interface using JavaFX, implementing a graph that users can interact with, as well as various fields and buttons for calculating swing points, reading and saving data to the file, and adding new candlesticks to the file.

However, testing the application was grueling because, at the time, I didn’t know of any free sources of historical financial data, so I had to enter all the data manually. Even after I discovered free historical financial data, the application lost its meaning without live financial data, which is almost always behind a paywall. I also came to understand that heuristics are not very good at predicting volatile and adaptive financial markets. For these reasons, I stopped all work on this project. Still, it taught me many things that I will carry with me throughout my professional career, allowed me to test my skills, and helped me apply my knowledge. I may give it a second life in the future.

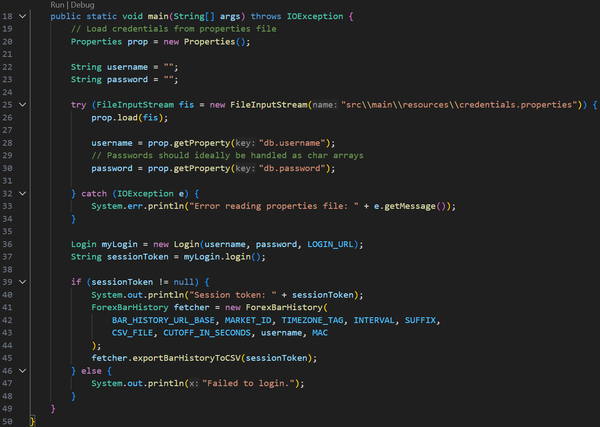

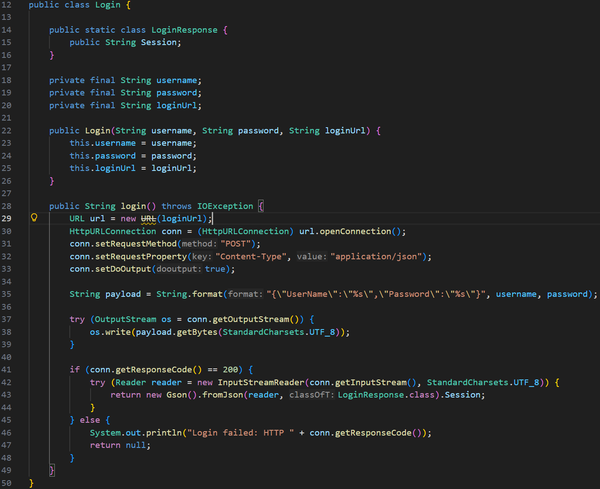

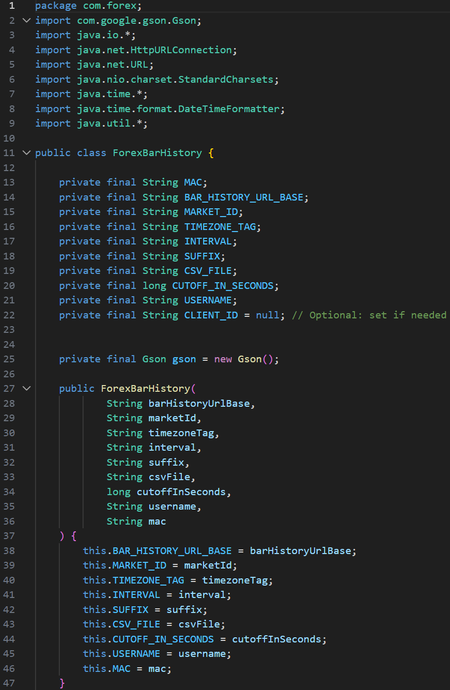

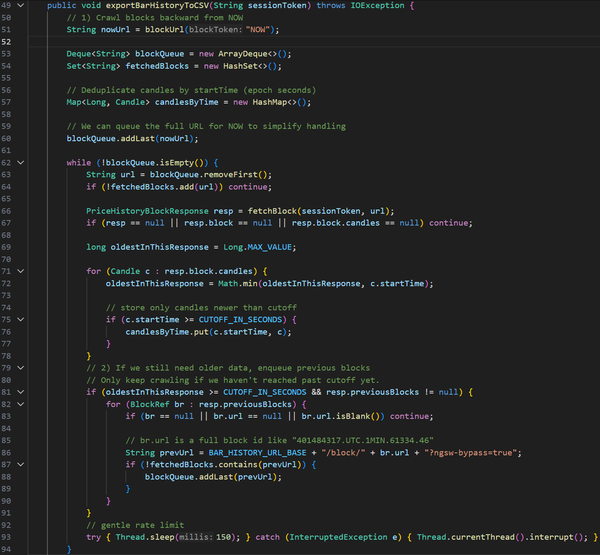

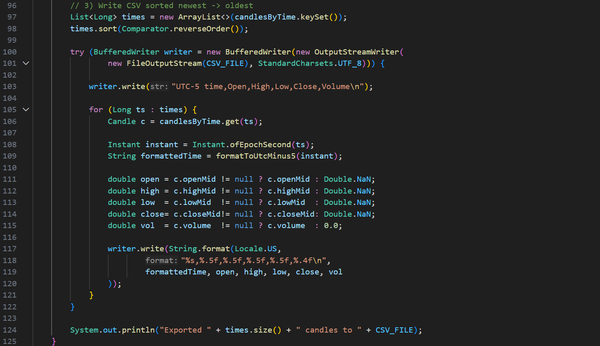

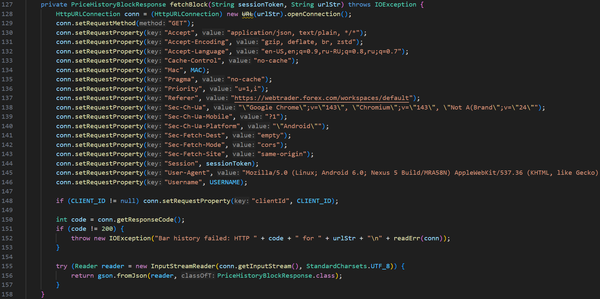

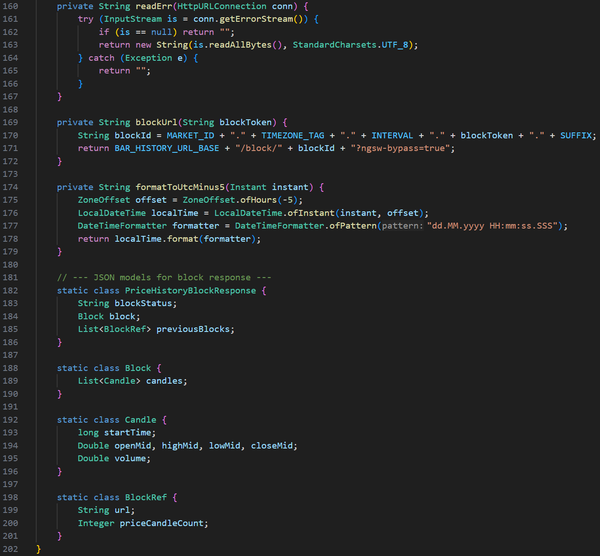

This project was developed strictly for educational and research purposes as part of my cybersecurity and defensive security training. The goal was to understand how automated tools may attempt to replicate legitimate front-end client behavior to bypass bot-detection systems, firewalls, and anti-abuse mechanisms.

All work was conducted in a controlled manner, with minimal data collection (under 10 MB), and without commercial use, redistribution, or external sharing. The scraper was not used to train machine learning models, generate revenue, or impact platform availability. The code is no longer functional due to subsequent changes in the target platform’s front-end and back-end logic.

This project was intended to strengthen my understanding of attacker methodologies and to design, evaluate, and implement more effective defensive security controls. As a cybersecurity practitioner, studying offensive techniques ethically and responsibly is essential for protecting systems, data, and organizational assets.

I do not condone unauthorized data access, misuse of automated tools, or violation of platform policies. This work reflects a commitment to responsible security research and ethical professional conduct.

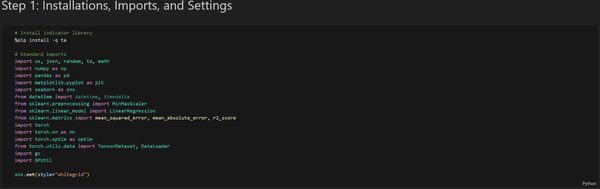

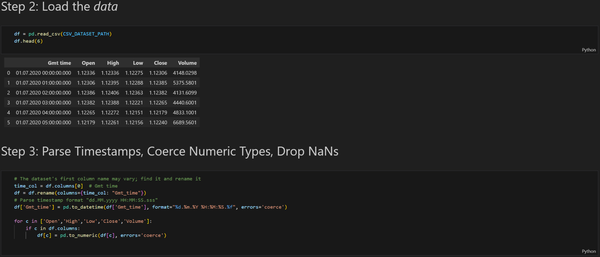

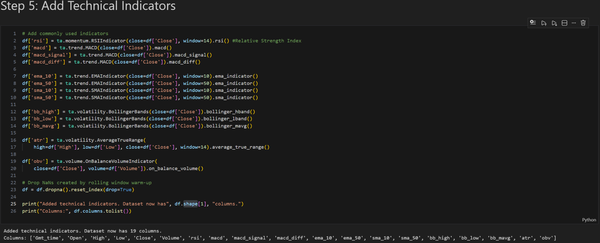

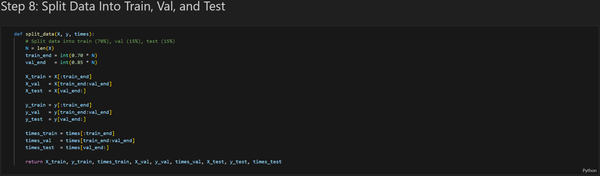

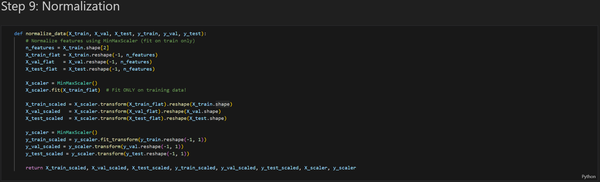

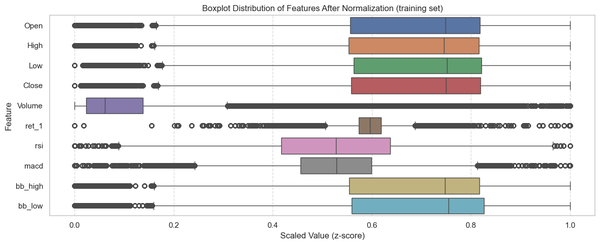

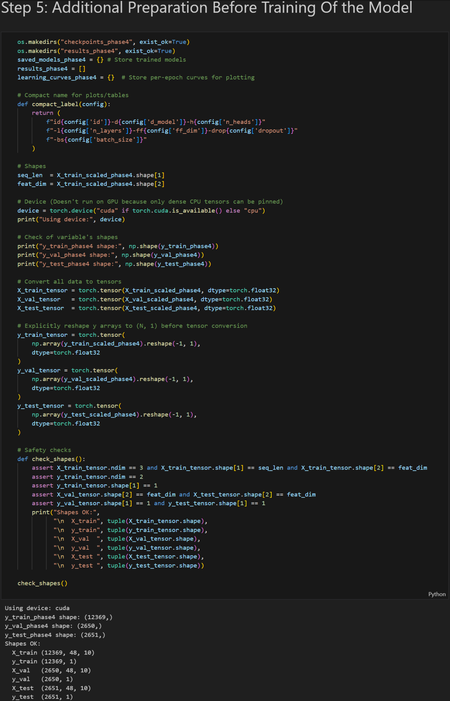

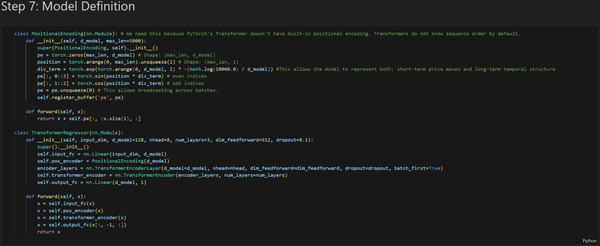

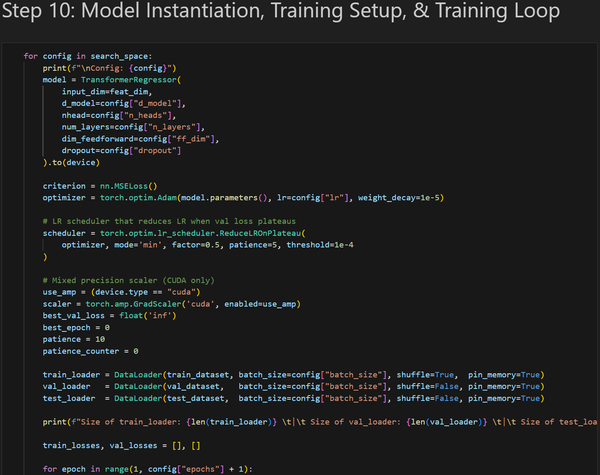

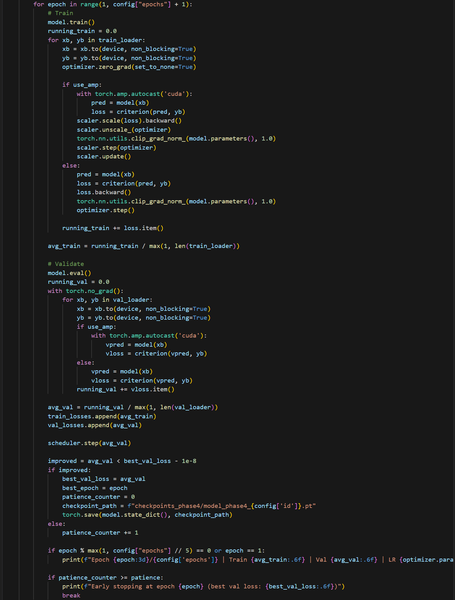

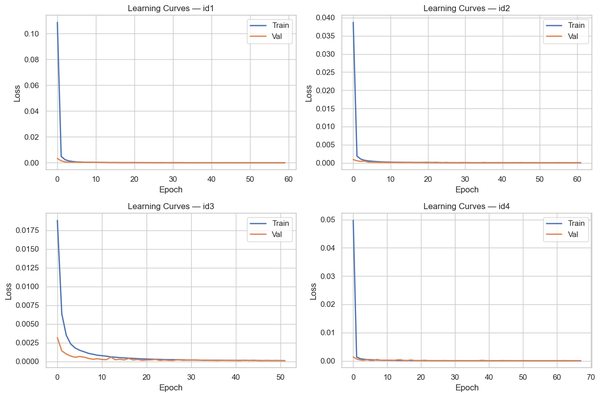

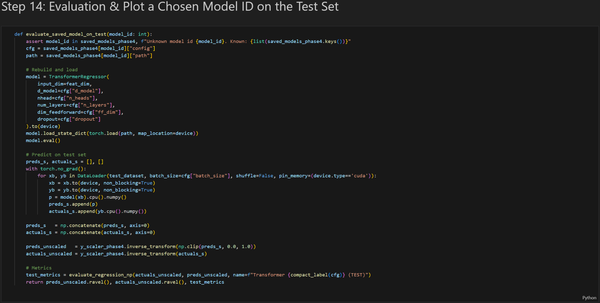

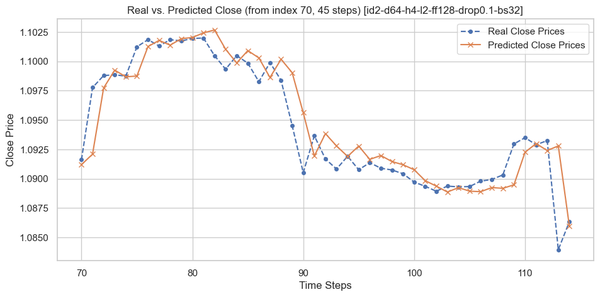

After my failed attempt to predict market movements using heuristics, I didn’t give up and continued to experiment, now with Artificial Intelligence. This project began at the end of September 2025 as part of the Introduction to Artificial Intelligence course’s semester project. Initially, the semester project was supposed to be a vanilla neural network for binary classification. Still, I decided to step out of my comfort zone and work with a Transformer model for a regression task. A big thank you to my teacher, Badri Adhikari, for allowing me to do this project and for guiding me through its completion.

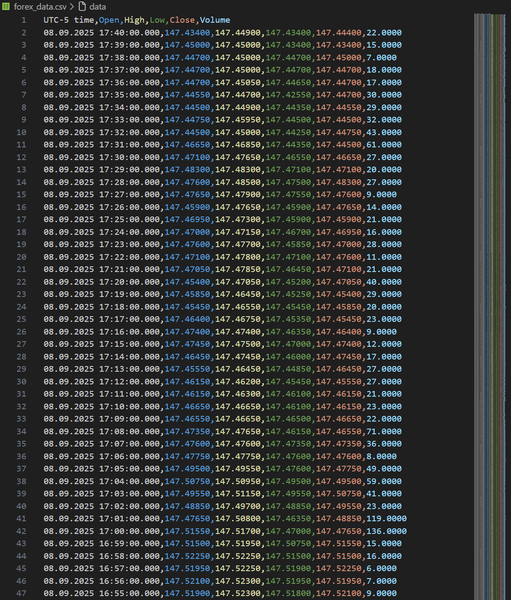

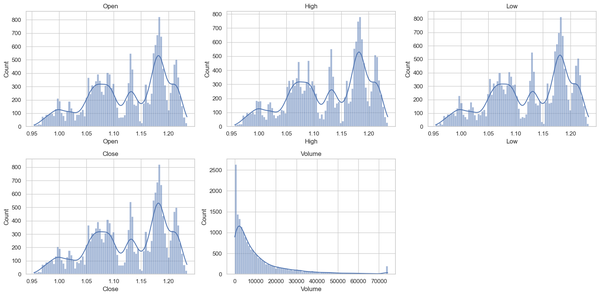

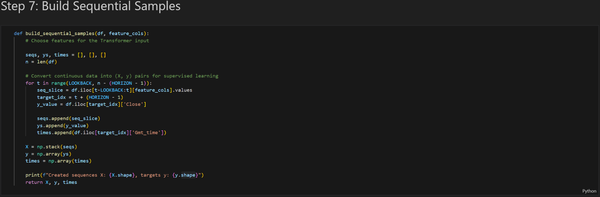

This project aims to develop a Transformer-based forecasting model for the EUR/USD currency pair using historical hourly candlestick data. The Transformer architecture was selected because it excels at learning long-term dependencies in sequential data. However, I picked a relatively small dataset of 17,768 hourly candlesticks. I chose a small dataset for two reasons: I didn’t have the hardware to work with large datasets, and I didn’t have the time to train on them. An ideal dataset for training a Transformer model would contain at least 1,000,000 candlestick data points.

The main objective of the Transformer model is to predict the closing price of the following hourly candlestick using a 48-hour lookback window. My project consisted of four parts:

I would say that most of the time I spent on this project was dedicated to learning. I had never used libraries such as NumPy, Pandas, and PyTorch, which were the backbone of the project. However, I was actually glad that this project was a dive into the unknown for me. It pushed me to focus and engage in ultralearning. Without the project, the skills and knowledge I gained in 3 months would most likely have taken years to learn.

Besides the learning and hands-on benefits of this project, it laid a strong foundation for my future work in Artificial Intelligence. I specifically designed the entire project to be adaptable and to support larger datasets, greater variation in model architectures, and more in-depth feature engineering. I went to great lengths to optimize VRAM usage and enable training on NVIDIA GPUs. In the near future, I plan to use larger, open-source datasets of historical currency exchange rates to improve the Transformer model’s performance.
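To make the 48-hour lookback window mentioned earlier more concrete, here is a minimal sketch of how hourly closing prices could be turned into (input window, next close) training pairs. It is written in plain Python to stay dependency-free and is not the project's actual pipeline, which was built on NumPy, Pandas, and PyTorch; the function name and the use of closing prices only (rather than full candlestick features) are assumptions.

```python
from typing import List, Sequence, Tuple


def make_windows(
    closes: Sequence[float], lookback: int = 48
) -> Tuple[List[List[float]], List[float]]:
    """Pair each run of `lookback` consecutive closes with the close that follows it."""
    inputs: List[List[float]] = []
    targets: List[float] = []
    for end in range(lookback, len(closes)):
        inputs.append(list(closes[end - lookback:end]))  # the previous 48 hours
        targets.append(closes[end])                      # the next hourly close
    return inputs, targets


# Each sample needs `lookback` candles of history plus one target candle,
# so a series of N candles yields N - lookback samples.
samples_from_dataset = 17_768 - 48  # 17,720 samples before any train/test split
```

In a real pipeline, these windows would typically be normalized and split chronologically into training, validation, and test sets so that the model is never evaluated on data from before its training period.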

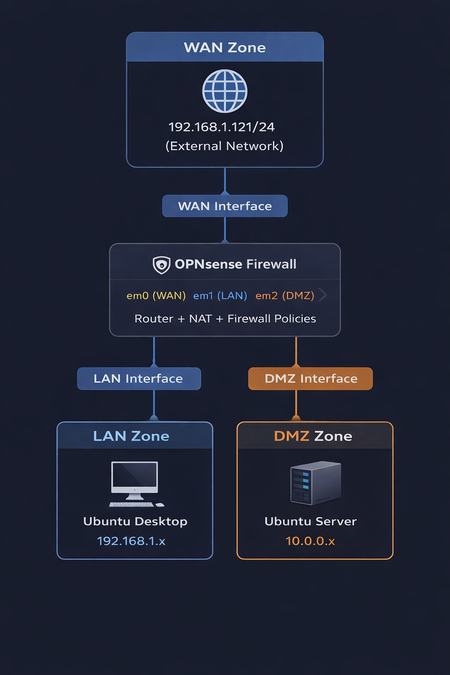

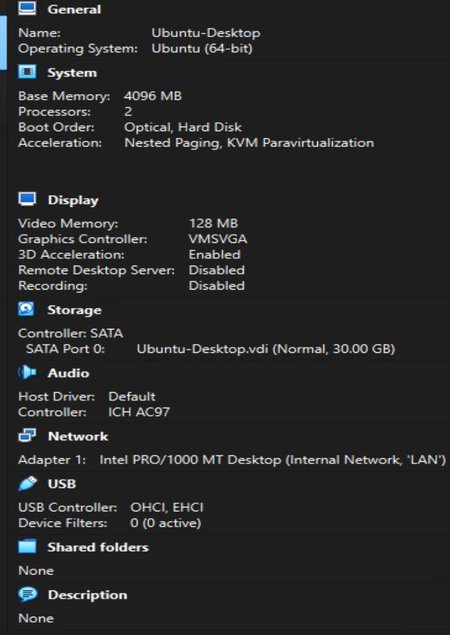

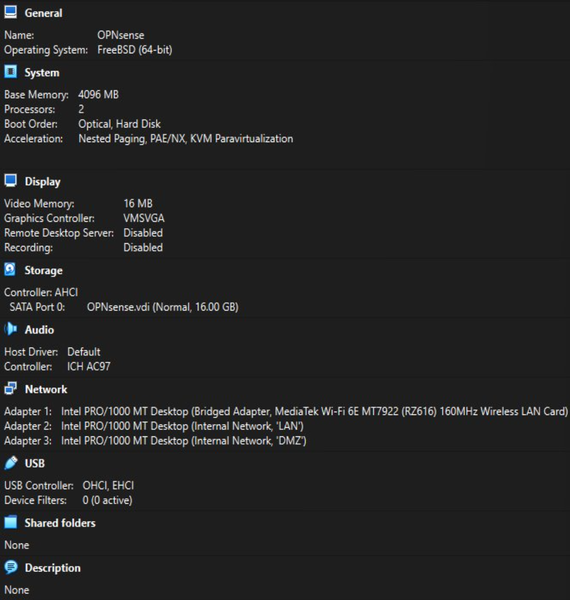

For this project, I worked in a group with two very talented Cybersecurity Specialists, Tony Huynh and Will Cuba. Together, we created a small company network that served as a Security Operations Center (SOC) lab, built on virtual machines, to monitor, detect, analyze, and respond to threats in real time. Our main objective was to build skills, test tools, and gain hands-on experience configuring a firewall, performing log analysis, investigating alerts, conducting threat hunting, and responding to incidents in a safe, virtualized environment.

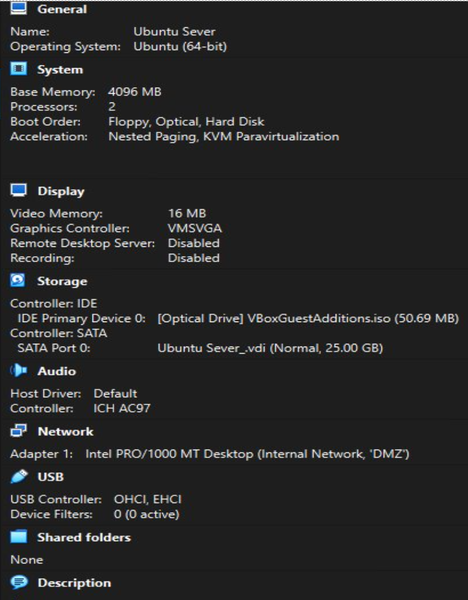

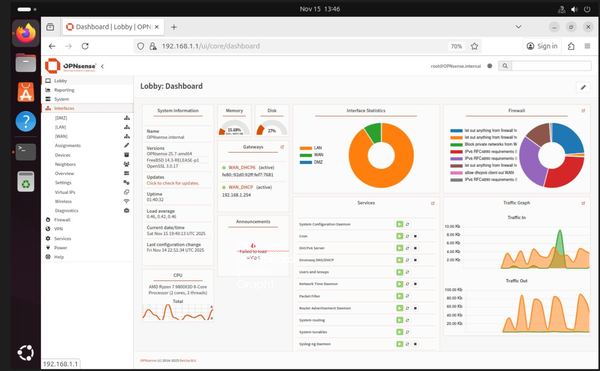

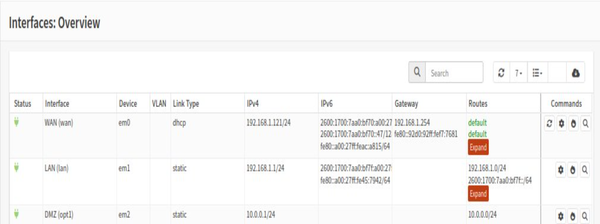

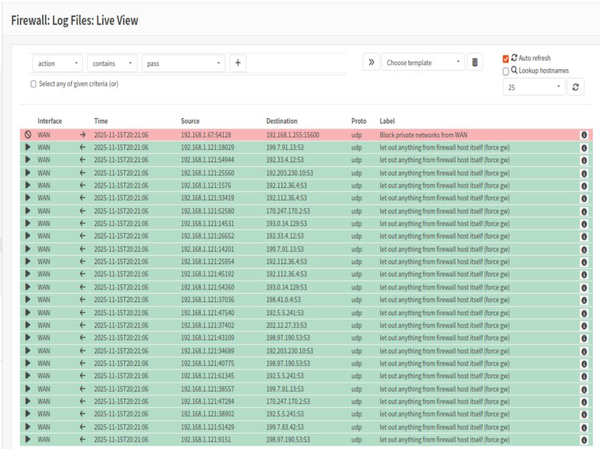

Our SOC lab consisted of three networks: WAN (the outside Internet), LAN (the internal office network), and DMZ (a special area for public servers). Network segmentation was done using OPNsense Firewall. The LAN was represented by Ubuntu Desktop, and the DMZ by Ubuntu Server.

OPNsense Firewall acted as the central router and security gateway. It handled NAT, firewall rules, DHCP, and inter-zone routing. The purpose of Ubuntu Server was to host services in an isolated zone. Ubuntu Desktop represented an internal employee workstation and was used to monitor, administer, and test connectivity. Tools such as the browser, ping, traceroute, and Wireshark were run on Ubuntu Desktop.

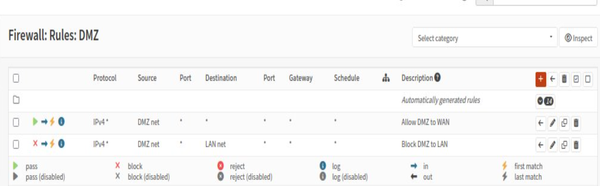

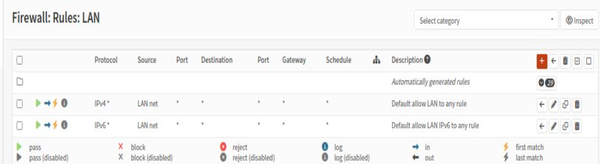

Firewall configuration:

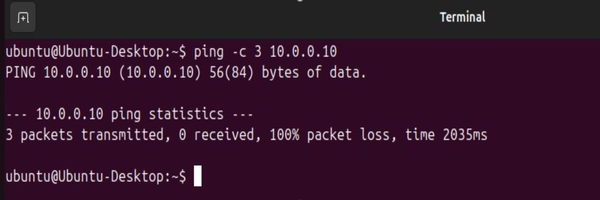

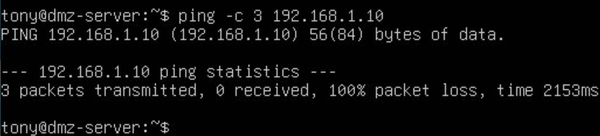

We verified the connection and firewall rules using Ping, Traceroute, and the OPNsense dashboard.

After setting everything up, we conducted in-depth penetration testing and incident response. We divided our team into two groups: two people acted as the attackers, and one person acted as the incident response team. The attackers used Metasploit and Nmap to conduct reconnaissance and launch the attack from the WAN. The incident response team used OPNsense logs and Wireshark to detect the intrusion and reconnaissance attempts.

Personally, I learned a lot from this project. The areas where I gained the most knowledge are setting up virtual machines in VirtualBox and configuring firewall rules. It truly was a profound experience, where I got to work in a team on a hands-on project and share expertise, knowledge, and skills.

As you might have already noticed, I have a soft spot for trading markets. When I discovered that I could get live financial data in code at an affordable price, without any special wizardry like scraping, APIs, or web crawling, I couldn’t walk past it. This magical place was TradingView, where users can write custom scripts in the Pine Script language.

Over the winter break between 2025 and 2026, I learned the language and started brainstorming and creating indicators. Eventually, I became so good at it that I decided to start writing scripts for clients. Several of my works are shown below.

I always wanted to have my own homelab. A homelab is not just interconnected hardware; it is freedom for any technology enthusiast. I wanted that freedom so much that, as soon as I had enough money from my part-time job, I invested almost all of it in building my homelab. The current total invested in the homelab is about $5,200. However, every penny spent was worth it.

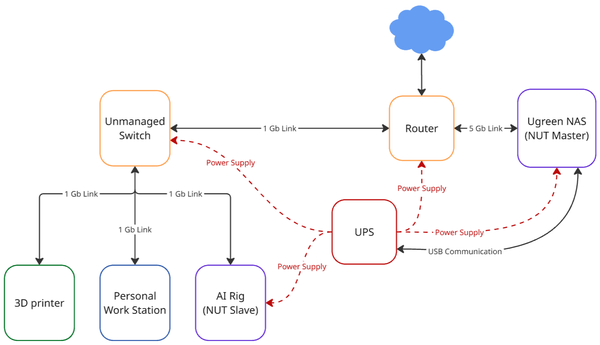

My homelab consists of a router, a 6-port 1 Gb unmanaged switch, a CyberPower CP1500PFCLCD UPS, a UGREEN DXP4800 Plus NAS with two 30 TB disks, a custom AI rig, a 3D printer, and my personal workstation. The lab was designed around two high-priority machines, the AI rig and the NAS. Resources were allocated so that those two machines have everything they need for continuous operation, resilience to unexpected power events, and hardware and data safety.

I chose this layout for my homelab for several reasons. First, the AI rig is prioritized because it runs critical processes, such as my personal AI training and rental jobs on Salad, where constant power and internet are essential; otherwise, progress or a job might be lost. Second, the NAS is prioritized because it is used not just by me but also by several other people who rely heavily on it. I chose not to connect the NAS to the unmanaged switch because the NAS depends heavily on network speed. The NAS supports speeds of up to 10 Gb, and the highest-speed port available to me on the router was a 5 Gb port, so I connected the NAS directly to the router. Finally, I prioritized the AI rig and the UGREEN NAS over other components because that is where most of the capital sits, and in the case of a power surge, I would not want those machines to be at risk.
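The reasoning behind the NAS placement comes down to the slowest hop on the path. The short sketch below works through the arithmetic, assuming the quoted speeds are link rates in gigabits per second (10 for the NAS port, 5 for the router port, 1 for the unmanaged switch) and ignoring protocol overhead and disk throughput. The function names and the 100 GB example transfer are hypothetical.

```python
def effective_link_gbps(*hop_speeds_gbps: float) -> float:
    """A path is only as fast as its slowest hop."""
    return min(hop_speeds_gbps)


def transfer_seconds(size_gigabytes: float, link_gbps: float) -> float:
    """Idealized transfer time: gigabytes -> gigabits, divided by the link rate."""
    return size_gigabytes * 8 / link_gbps


NAS_PORT_GBPS = 10.0     # NAS network port, as stated above
ROUTER_PORT_GBPS = 5.0   # fastest available router port
SWITCH_PORT_GBPS = 1.0   # unmanaged switch port (assumed 1 Gb/s)

via_switch = effective_link_gbps(NAS_PORT_GBPS, SWITCH_PORT_GBPS)  # 1.0 Gb/s
via_router = effective_link_gbps(NAS_PORT_GBPS, ROUTER_PORT_GBPS)  # 5.0 Gb/s

# Idealized time to move a hypothetical 100 GB backup over each path.
seconds_via_switch = transfer_seconds(100, via_switch)  # 800.0 s
seconds_via_router = transfer_seconds(100, via_router)  # 160.0 s
```

Under these assumptions, plugging the NAS into the router gives up to five times the throughput of the switch path, even though neither option reaches the full 10 Gb the NAS supports.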

Overall, this homelab represents both a technical achievement and a long-term personal investment in my growth as a cybersecurity and systems professional. It allows me to experiment, learn, and operate real-world infrastructure in a controlled environment while developing practical skills in networking, power management, automation, and system reliability. More than just a collection of devices, this homelab is a continuously evolving platform that supports my academic, professional, and entrepreneurial goals, and it reflects my commitment to building resilient, high-performance systems from the ground up.